Data.gov

Thursday, March 5th, 2009

We recently posted an article about Vivek Kundra, who was named United States CIO this morning by the Obama administration. He's got $71 billion in IT spending under his care. Hmm, that's a lot of data browsers.

One interesting tidbit appeared in this Saul Hansell NY Times article:

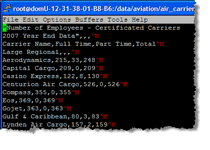

Another initiative will be to create a new site, Data.gov, that will become a repository for all the information the government collects. He pointed to the benefits that have already come from publishing the data from the Human Genome Project by the National Institutes of Health, as well as the information from military satellites that is now used in GPS navigation devices.

"There is a lot of data the federal government has and we need to make sure that all the data that is not private, or restricted for national security reasons, can be made public," [Kundra] said.

In another bit of interesting news, Jonathan Stein at Mother Jones notes that Mike Honda (D-Calif) added a provision to the recent appropriations bill that requires government entities to make their public data available in raw form:

If the Senate passes the bill with the provision intact, citizens seeking information about Congress' activities—such as bill names and numbers, amendments, votes, and committee reports—won't have to rely on government websites, which often filter information, are incomplete, or are difficult to use. Instead, the underlying data will be available to anyone who wants to build a superior site or tool to sift through it. “The language is groundbreaking in that it supports providing unfiltered legislative information to the public,” says Honda's online communications director, Rob Pierson. “Instead of silo-ing the information, and only allowing access through a limited web form, access to the raw data will make it easier for people to learn what their government is doing.”

Kim Zetter from Wired has more on the story here.

Maybe once the data is made more accessible, some clever folks can put an interface on things that improves the complex aftermath of the “laws and sausages” routine. I did my best to search for Honda's three-sentence provision in the latest omnibus bill, with no luck. Does anyone know what the actual provision stated? [UPDATE: Rob Pierson, Online Communications Director of Congressman Honda's office, provided a link to an O'Reilly post with the full text of the provision. Give the full article a read; it's quite worthwhile.]

And, for posterity, here are some of the data repositories mentioned in the articles above:
